A lightweight documentation system

Background

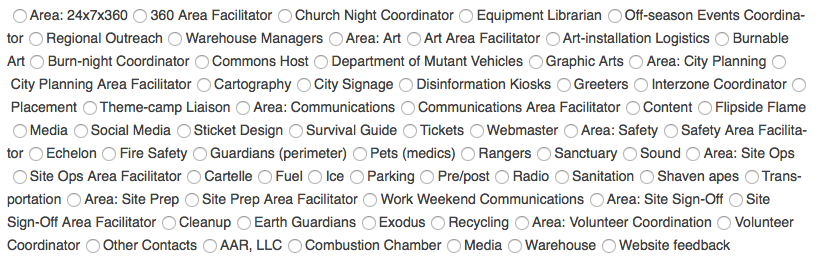

Not long after I took on my current role at AAR LLC, I inherited the task of producing the “binders” that the organization prints up every event cycle: basically, operations manuals for the event.

There was a fair amount of overlap between these binders, and I recognized that keeping that overlapping content in sync would become a problem. I studied documentation technologies and techniques, and learned that duplicated content is indeed considered a Bad Thing, and that “single sourcing” is the solution. This would require that the binders be refactored into their constituent chapters, which could be edited individually and compiled into complete documents on demand.

The standard technology for this is DITA, but that involves a lot of overhead. It would be hard for me to maintain by myself, and impossible to hand off to anyone else. What I’ve come up with instead is still a work in progress. It has a bit of a tech hurdle to overcome, since it does involve using the command line, but it should be a lot more approachable.

The following may seem like a lot of work. It’s predicated on the idea that it will pay off by solving a few problems:

- You are maintaining several big documents that have overlapping content

- You want to be able to publish those documents in more than one format (web, print, ebook)

- You want to be able to update your materials easily, quickly, and accurately

The following is Mac-oriented because that’s what I know.

Installation

Install Homebrew

Homebrew is a “package manager” for macOS. If you’ve never used the command-line shell before, or have never installed shell programs, this is your first step. Think of it as an App Store for shell programs. This makes installing and updating other shell apps much easier.

To install, open the Terminal app and paste in

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Important: Don’t paste in shell commands you find on the Internet unless you know what you’re doing or you really trust me. But this is exactly what the nice folks at Homebrew will tell you to do.

Install Pandoc

Pandoc is a Swiss Army knife for converting text documents from one form to another. In the Terminal app, paste in

brew install pandoc

Homebrew will chew on that for a while and finish.

Install GPP

GPP is a “generic preprocessor,” which means that it substitutes one thing for another in your files. In the Terminal app, paste in

brew install gpp

Again, Homebrew will chew on that for a while and finish.
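To confirm that all three tools ended up where the shell can find them, a quick check along these lines may help. This is only a sketch: the check_tool function is a made-up helper, and all it does is report where each command lives or say that it is missing.

```shell
# Report whether a given command is available on the PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: installed at $(command -v "$1")"
  else
    echo "$1: not found"
  fi
}

# Check the three tools installed in this section.
for tool in brew pandoc gpp; do
  check_tool "$tool"
done
```

If any of them comes back as not found, rerun the matching install step before going further.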

Learning

Learn some shell commands

You’ll at least need to learn the cd and ls commands.

This looks like a pretty good introductory text.
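As a small taste of those two commands, here is a practice run you could try, assuming nothing more than a standard Terminal. It works inside a throwaway folder created by mktemp, so it won’t touch any of your real files; the folder name sources is just borrowed from later in this article.

```shell
# Create a throwaway practice folder with one subfolder in it.
practice=$(mktemp -d)
mkdir "$practice/sources"

cd "$practice"    # change directory: move into the practice folder
pwd               # print the full path of the folder you are in
ls                # list its contents
cd sources        # move down into the subfolder
cd ..             # move back up one level
```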

Learn Markdown

Markdown was created by John Gruber to be a lightweight markup language–a way to write human-readable text that can be converted to HTML, the language of web pages. If you don’t already know the rudiments of HTML, the key thing to remember about it is that it describes the structure of a document, not its appearance. So you don’t say “I want this line to be in 18-pt Helvetica Bold,” you say “I want this line to be a top-level heading.” How it looks can be decided later.

Since then, others have taken that idea and run with it. The author of Pandoc, John MacFarlane, built Pandoc to understand an expanded Markdown syntax that adds a bunch of useful features, such as tables, definition lists, etc. The most basic elements of Markdown are really easy to learn; it has a few less-intuitive expressions, but even those are pretty easy to master, and there are cheat-sheets all over the Internet.

Markdown is plain text, which means you can write it in anything that lets you produce text, but if you do your writing in MS Word (aside: please don’t), you need to make sure to save as a .txt file, not a .doc or .docx file. There are a number of editors specifically designed for Markdown that show a pane of rendered text side-by-side with what you’re typing; there’s even a perfectly competent online editor called Dillinger that you can use.

I’ve gotten to the point where I do all my writing in Markdown, except when required to use some other format for my work. There are a lot of interesting writing apps that cater to it, writing is faster, and the files are smaller and more portable.

Organization

Refactor files and mark them up

Getting your files set up correctly is going to be more work than any other part of this. You’ll need to identify areas of overlap, break those out into standalone documents, decide on the best version of those (assuming they’re out of sync), and break up the rest of the monolithic documents into logical chunks as well. I refer to the broken-up documents as “component files.”

Give your files long, descriptive names. For redundancy, I also identify the parent documents in braces right in the filename, e.g., radio_channel_assignments_{leads}_{gate}.md. Using underscores instead of spaces makes things a little easier when working in the shell. Using md for the dot-extension lets some programs know that this is a Markdown file, but you could also use txt.
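One payoff of the braces-in-the-filename habit is that you can list every component file that belongs to a given parent document. The sketch below assumes a scratch folder and two made-up filenames (shift_schedule and ticket_scanning) alongside the real example; list_components is a hypothetical helper, not part of any tool.

```shell
# Scratch sources folder; only the radio file name comes from the article,
# the other two are invented for illustration.
demo=$(mktemp -d)
mkdir "$demo/sources"
touch "$demo/sources/radio_channel_assignments_{leads}_{gate}.md" \
      "$demo/sources/shift_schedule_{leads}.md" \
      "$demo/sources/ticket_scanning_{gate}.md"

# List the files tagged with a parent document name.
# The braces are kept inside quotes so the shell treats them literally.
list_components() {
  ls "$2"/*"{$1}"*
}

list_components gate "$demo/sources"
```

Running it with leads instead of gate would list the radio file and the shift schedule.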

Then you’re going to mark these up in Markdown. If your files already have formatting in MS Word or equivalent, you’re going to lose all that, and you’ll need to make some editorial decisions about how you want to represent the old formatting (remember: structure, not appearance). Again, this will be a fair bit of work, but you’ll only need to do it once, and it will pay off.

Organize all these component files in a single directory. I call mine sources.

Create Variables

This is optional, but if you know that there are certain bits of information that will change regularly, especially bits that appear repeatedly throughout your documents, save yourself the trouble of finding and replacing them. Instead, go through your component files and insert placeholders. Use nomenclature that will be obvious to anyone looking at it, like THE_YEAR or FLAVOR_OF_THE_MONTH. You don’t need to use all caps, but that does make the placeholders more obvious. You cannot use spaces, so use underscores, hyphens, or camelCasing.

Now, create a document called variables.txt. Its contents should be something like this:

#define THE_YEAR 2018

#define FLAVOR_OF_THE_MONTH durian

…

And so on. Each of these lines is a command that GPP will interpret and will substitute the first term with the second. This lets you make all those predictable changes in one place. Save this in your sources directory.
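To see the substitution happen on a small scale, something like the following should work. It is a sketch under a few assumptions: it runs in a scratch folder, the file welcome.md and its sentence are made up, and for brevity the test file pulls in variables.txt itself rather than going through a BOM. GPP is only run if it is installed.

```shell
# Scratch folder with a variables file and one made-up component file.
work=$(mktemp -d)
cd "$work"
printf '%s\n' '#define THE_YEAR 2018' '#define FLAVOR_OF_THE_MONTH durian' > variables.txt
printf '%s\n' '#include variables.txt' 'The flavor for THE_YEAR is FLAVOR_OF_THE_MONTH.' > welcome.md

# Run GPP if available; the substituted text is written to welcome_out.md.
if command -v gpp >/dev/null 2>&1; then
  gpp welcome.md -o welcome_out.md
  cat welcome_out.md
else
  echo "gpp not found; install it with: brew install gpp"
fi
```

Change the values in variables.txt, run GPP again, and the sentence in the output should change to match.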

You can get into stylistic problems if you begin a sentence with a placeholder that gets substituted with an uncapitalized replacement. There may be a good solution, but I haven’t figured it out. You should be able to write around this in your component docs.

Create BOMs

In order to rebuild your original monolithic documents from these pieces, you’ll want to create what I call a bill of materials (BOM) for each target document. This defines what the constituent component files are, and when you run GPP, the BOM tells GPP to assemble its output file from those component files.

I like to keep each BOM in a separate directory that’s at the same level as my sources directory, which also gives me a separate directory to contain my output files. My directory tree looks like this:

My Project
    gate
        gate-bom.txt
    leads
        leads-bom.txt
    sources
        variables.txt
        radio_channel_assignments_{leads}_{gate}.md
        …

The contents of each BOM file will look something like this:

#include ../sources/variables.txt

#include ../sources/radio_channel_assignments_{leads}_{gate}.md

…

Because the BOM file is nested in a directory adjacent to the sources directory, you need to “surface” and then “dive down” into the adjacent directory. The leading ../ is what lets you surface, and the sources/ is what lets you dive down into a different directory.
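If you would rather set up this layout from the shell than by hand, a sketch like this could do it. It builds everything in a scratch folder, and the contents written into the component file are placeholders invented for the example; only the folder and file names follow the tree above.

```shell
# Build the example layout in a scratch folder.
project=$(mktemp -d)
mkdir -p "$project/gate" "$project/leads" "$project/sources"
printf '%s\n' '#define THE_YEAR 2018' > "$project/sources/variables.txt"
printf '%s\n' 'Radio channels for THE_YEAR' \
  > "$project/sources/radio_channel_assignments_{leads}_{gate}.md"

# Each BOM pulls in the variables first, then the component files in order.
for doc in gate leads; do
  printf '%s\n' \
    '#include ../sources/variables.txt' \
    '#include ../sources/radio_channel_assignments_{leads}_{gate}.md' \
    > "$project/$doc/$doc-bom.txt"
done

# From inside a BOM folder, ../sources reaches the shared sources folder.
cd "$project/gate"
ls ../sources
```

The final ls is a quick way to confirm that the ../sources path in the BOM really points where you think it does.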

Compilation & conversion

So you’ve got your files refactored and marked up, you’ve got your variables set up, you’ve got your BOMs laid out. Now you want to get back what you had before. Now it’s time for the command line.

Open the Terminal app, type cd followed by a space, drag the folder containing the BOM file you want to compile into the Terminal window (this will insert the correct path), and hit “return”. Use the ls command to confirm that the only file you can see is the BOM file you want to compile.

Now it’s time to run the following command:

gpp source-bom.txt -o source.md

This says “tell GPP to read in the file source-bom.txt, run all the commands in it, and create an output file based on it called source.md”. Make whatever filename substitutions are appropriate. The output file will be in the same directory as the BOM file. This will be a big Markdown file that is assembled from all the component files in the BOM, with all the variable substitutions performed.
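If you compile several BOMs regularly, typing the filename substitutions by hand gets old. One way to avoid it, assuming your BOMs all follow the name-bom.txt pattern used here, is a pair of small shell functions. Both function names are made up for this sketch.

```shell
# Derive the output name from the BOM name: gate-bom.txt becomes gate.md.
bom_output_name() {
  echo "${1%-bom.txt}.md"
}

# Compile one BOM with GPP; run this from inside the folder that holds it.
compile_bom() {
  gpp "$1" -o "$(bom_output_name "$1")"
}

# Show the name that would be used for the gate BOM.
bom_output_name gate-bom.txt
```

With those defined, running compile_bom gate-bom.txt from inside the gate folder would write gate.md next to the BOM.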

Now that you have a Markdown file, the world is your oyster. Some content-management systems can interpret Markdown directly. WordPress requires the Jetpack plugin, but that’s easily installed. So depending on how you’ll be using that document, you may already be partly done.

If you want to convert it to straight HTML, or to an MS Word doc, or anything else, now it’s time to run Pandoc. Again, in the Terminal app, type this in:

pandoc source.md -s -o source.html

This says “tell Pandoc to read in the file source.md and create a standalone (-s) output file called source.html”. If you leave out the -s, Pandoc produces only an HTML fragment: the body content, without the surrounding html, head, and body wrapper that makes it a complete page. It figures out what kind of output file you want from the dot-extension, and can also produce MS Word files and a host of others. It uses its own template files as the basis for its output files, but you can create your own template files and direct Pandoc to use those instead.

I do my print documents in InDesign, and Pandoc can produce “icml” files that InDesign can flow into a template. Getting the styles set up in that template takes some trial and error, but again, once you’ve got it the way you like it, you don’t need to mess with it again.
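Because Pandoc picks the output format from the dot-extension, producing several formats from one Markdown file is just a loop. Here is a sketch; convert_all is a made-up helper name, and it prints each command it is about to run, then runs it only if Pandoc is installed and the source file exists.

```shell
# Convert one Markdown file to several formats, one per extension given.
convert_all() {
  src=$1
  shift
  for ext in "$@"; do
    out="${src%.md}.$ext"
    echo "pandoc $src -s -o $out"
    if command -v pandoc >/dev/null 2>&1 && [ -f "$src" ]; then
      pandoc "$src" -s -o "$out"
    fi
  done
}

# Web page, MS Word file, and InDesign-ready ICML from the same source.
convert_all source.md html docx icml
```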

Shortcomings and prospects

The one thing this approach lacks is any kind of version control. In my case, I create a directory for every year, and make a copy of the source directory and the rest inside the year directory. This doesn’t give me true version control–I rely on Time Machine for that–but it does let me keep major revisions separate. Presumably using Git for my sources would give me more granular version control.
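For what it’s worth, a minimal Git setup for a sources directory might look like the sketch below. It assumes Git is installed, works in a scratch folder rather than a real project, and uses a placeholder name and email for the commit; tagging each event year is just one possible convention.

```shell
# Scratch stand-in for a sources folder.
repo=$(mktemp -d)
cd "$repo"
printf '%s\n' '#define THE_YEAR 2018' > variables.txt

# Start tracking the folder and record a first snapshot.
git init -q
git add variables.txt
# The -c options supply a placeholder identity for this one commit only.
git -c user.name="Example Author" -c user.email="author@example.com" \
    commit -q -m "Sources for the 2018 event"

# Mark this snapshot with the event year so it is easy to find later.
git tag 2018

git log --oneline
```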

Depending on what your output formats are going to be, placed images can be a bother. I haven’t really solved this one to my satisfaction yet. You may want to use a PDF-formatted image for the print edition and a PNG-formatted image for the web; Pandoc does let you set conditionals in your documents, but I haven’t played with that yet.

In fact, I haven’t really scratched the surface of everything that I could be doing with GPP and Pandoc, but what I’ve documented here gives me a lot of power. I’ve also recently learned of a different preprocessor called Panda, which subsumes GPP and can also turn specially formatted text into graphical diagrams using other shell programs, such as Graphviz. I’m interested in exploring that.